AlignSDF: Pose-Aligned Signed Distance Fields for Hand-Object Reconstruction

Abstract

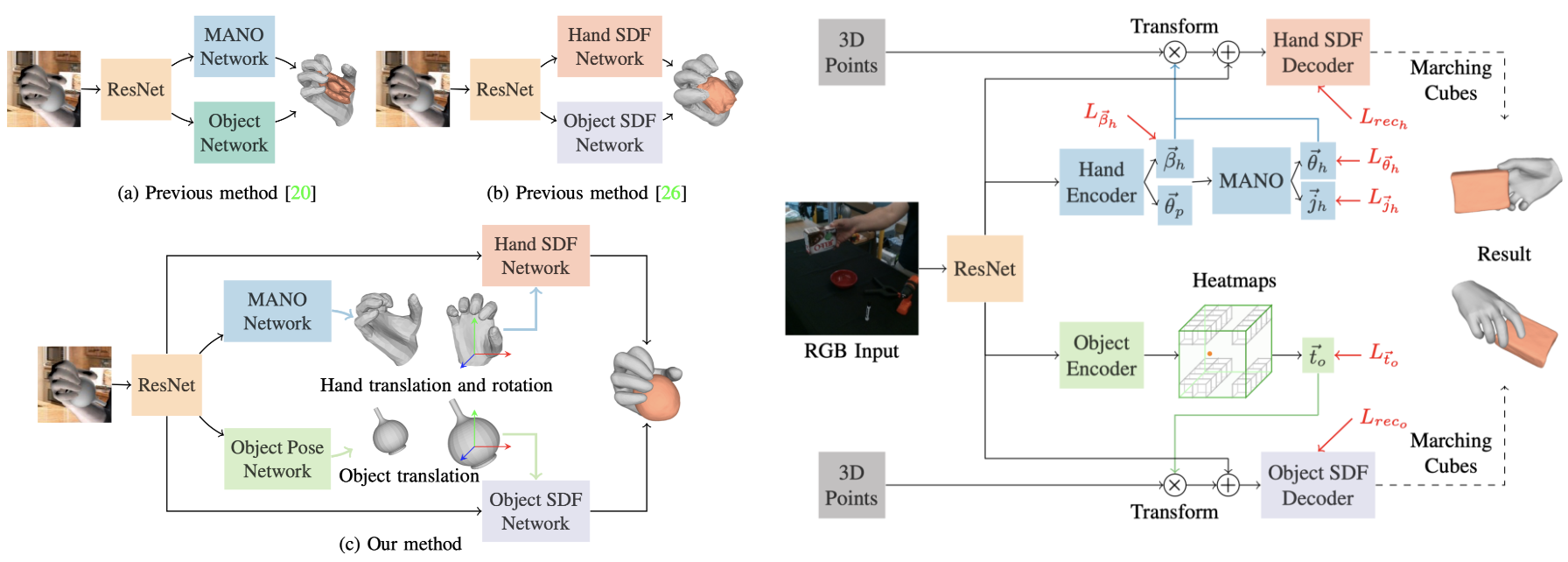

Recent work has achieved impressive progress towards joint reconstruction of hands and manipulated objects from monocular color images. Existing methods rely on one of two alternative representations: parametric meshes or signed distance fields (SDFs). On the one hand, parametric models benefit from prior knowledge at the cost of limited shape deformations and mesh resolutions. Mesh models may hence fail to precisely reconstruct details such as the contact surfaces of hands and objects. SDF-based methods, on the other hand, can represent arbitrary details but lack explicit priors. In this work we aim to improve SDF models using priors provided by parametric representations. In particular, we propose a joint learning framework that disentangles pose and shape. We obtain hand and object poses from parametric models and use them to align SDFs in 3D space. We show that such aligned SDFs focus better on reconstructing shape details and improve reconstruction accuracy for both hands and objects. We evaluate our method and demonstrate significant improvements over the state of the art on the challenging ObMan and DexYCB benchmarks.

Interactive 3D Demo

We present an interactive demo of our reconstructed 3D models. Our model takes an RGB image as input and generates the output by decoding the predicted signed distance fields.

Method

Our method. We combine the advantages of parametric mesh models and SDFs. Pose networks first estimate the hand and object poses. We then use the estimated poses to transform sampled 3D points into their canonical counterparts, so that the SDF networks can focus on learning geometry under the canonical hand or object pose.
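The alignment step can be illustrated with a minimal sketch. It assumes the estimated pose is a rigid transform (a rotation `R` and a translation `t`), so a query point `x` maps to the canonical frame as `R^T (x - t)`. The function names, the rotation helper, and the box-shaped SDF are hypothetical stand-ins chosen for illustration; they are not the pose parametrization or the learned SDF networks used in the paper.

```python
import math

def rotation_z(angle):
    """3x3 rotation about the z-axis as nested lists (illustrative pose rotation)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def to_canonical(point, rotation, translation):
    """Map a camera-space point into the canonical frame: R^T (x - t)."""
    d = [point[i] - translation[i] for i in range(3)]
    # Multiplying by the transpose inverts the rotation.
    return [sum(rotation[k][i] * d[k] for k in range(3)) for i in range(3)]

def box_sdf(point, half_extents=(1.0, 0.5, 0.25)):
    """Toy canonical-space SDF of an axis-aligned box (stand-in for a learned SDF)."""
    q = [abs(point[i]) - half_extents[i] for i in range(3)]
    outside = math.sqrt(sum(max(v, 0.0) ** 2 for v in q))
    inside = min(max(q), 0.0)
    return outside + inside

def aligned_sdf(point, rotation, translation, canonical_sdf=box_sdf):
    """Evaluate a canonical SDF at a camera-space query point via pose alignment."""
    return canonical_sdf(to_canonical(point, rotation, translation))

if __name__ == "__main__":
    R = rotation_z(math.pi / 2)   # assumed estimated rotation
    t = [2.0, 0.0, 0.0]           # assumed estimated translation
    # A point on the posed box surface and the posed box center.
    print(aligned_sdf([2.0, 1.0, 0.0], R, t))
    print(aligned_sdf(t, R, t))
```

Because the SDF is always queried in the canonical frame, the same shape function serves every pose; only `R` and `t` change from one image to the next. This is the sense in which pose and shape are disentangled in the sketch.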

BibTeX

@InProceedings{chen2022alignsdf,

author = {Chen, Zerui and Hasson, Yana and Schmid, Cordelia and Laptev, Ivan},

title = {{AlignSDF}: {Pose-Aligned} Signed Distance Fields for Hand-Object Reconstruction},

booktitle = {ECCV},

year = {2022},

}

Acknowledgements

This work was granted access to the HPC resources of IDRIS under the allocation AD011013147 made by GENCI. It was funded in part by the French government under the management of the Agence Nationale de la Recherche as part of the “Investissements d’avenir” program, reference ANR19-P3IA-0001 (PRAIRIE 3IA Institute), and by the Louis Vuitton ENS Chair on Artificial Intelligence.

Some functions used to build the interactive 3D demo are borrowed from Nilesh Kulkarni's DRDF webpage. Thanks to him for his great work.

Copyright

The documents contained in these directories are included by the contributing authors as a means to ensure timely dissemination of scholarly and technical work on a non-commercial basis. Copyright and all rights therein are maintained by the authors or by other copyright holders, notwithstanding that they have offered their works here electronically. It is understood that all persons copying this information will adhere to the terms and constraints invoked by each author's copyright.